On the 1st-2nd of November 2018 I was lucky enough to attend the Archives Unleashed Datathon Vancouver, co-hosted by the Archives Unleashed Team and Simon Fraser University Library along with KEY (SFU Big Data Initiative). I am very thankful for the generous travel grant from the Andrew W. Mellon Foundation that made this possible.

The SFU campus at the Harbour Centre was an amazing venue for the Datathon and it was nice to be able to take in some views of the surrounding mountains.

I dunno, this view from #hackarchives SFU might be the best datathon view ever… pic.twitter.com/D9gCfuF8Ux

— Ian Milligan (@ianmilligan1) November 2, 2018

About the Archives Unleashed Project

The Archives Unleashed Project is a three-year project with a focus on making historical internet content easily accessible to scholars and researchers whose interests lie in exploring and researching both the recent past and contemporary history.

After a series of datathons held at a number of international institutions such as the British Library, University of Toronto, Library of Congress and the Internet Archive, the Archives Unleashed Team identified some key areas of development that would help deliver their aim of making petabytes of valuable web content accessible.

Key Areas of Development

- Better analytics tools

- Community infrastructure

- Accessible web archival interfaces

By engaging and building a community, alongside developing web archive search and data analysis tools, the project is successfully enabling a wide range of people including scholars, programmers, archivists and librarians to “access, share and investigate recent history since the early days of the World Wide Web.”

The project has a three-pronged approach:

- Build a software toolkit (Archives Unleashed Toolkit)

- Deploy the toolkit in a cloud-based environment (Archives Unleashed Cloud)

- Build a cohesive user community that is sustainable and inclusive by bringing together the project team members with archivists, librarians and researchers (Datathons)

Archives Unleashed Toolkit

The Archives Unleashed Toolkit (AUT) is an open-source platform for analysing web archives with Apache Spark. I was really impressed by AUT due to its scalability, relative ease of use and the large number of analytical options it provides. It can run on a laptop (macOS, Linux or Windows), a single-node server or a powerful cluster, and if you wanted to, you could even run it on a Raspberry Pi. The Toolkit allows for a number of search functions across the entirety of a web archive collection. You can filter collections by domain, URL pattern, date, language and more. You can create lists of URLs and return, for example, the top ten in a collection. You can extract plain text from the HTML files in an ARC or WARC file and clean the data by removing ‘boilerplate’ content such as advertisements. It’s also possible to use the Stanford Named Entity Recognizer (NER) to extract named entities such as locations, organisations and persons. I’m looking forward to seeing how this functionality is adapted to localised instances and controlled vocabularies – would it be possible to run a similar programme for automated tagging of web archive collections in the future? Perhaps you could ingest a collection into AUT, run NER and automatically tag the data, providing richer metadata for web archives and subsequent research.
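To give a feel for what a "top ten" list of this kind involves, here is a minimal sketch in plain Python. It is not AUT's own API (AUT does this at scale on Apache Spark); the function name `top_domains` and the sample URLs are my own illustrative assumptions.

```python
from collections import Counter
from urllib.parse import urlsplit


def top_domains(urls, n=10):
    """Return the n most frequent host names in an iterable of URLs."""
    hosts = []
    for url in urls:
        host = urlsplit(url).hostname  # None when the string has no host part
        if host:
            # Treat www.example.org and example.org as the same domain.
            if host.startswith("www."):
                host = host[4:]
            hosts.append(host)
    return Counter(hosts).most_common(n)


if __name__ == "__main__":
    # Hypothetical URLs standing in for the pages of a web archive collection.
    sample = [
        "http://www.example.org/letters",
        "http://example.org/about",
        "https://example.com/",
    ]
    for domain, count in top_domains(sample):
        print(domain, count)
```

On a real collection the list of URLs would come from the archive itself rather than a hand-written list, but the counting idea is the same.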

Archives Unleashed Cloud

The Archives Unleashed Cloud (AUK) is a GUI-based front end for working with AUT. It provides an accessible interface for generating research derivatives from web archive files (WARCs). With a few clicks, users can ingest and sync Archive-It collections, analyse them, create network graphs and visualise connections and nodes. It is currently free to use and runs on AUK central servers.

My experience at the Vancouver Datathon

The datathons bring together a small group of 15-20 people of varied professional backgrounds and experience to work and experiment with the Archives Unleashed Toolkit and the Archives Unleashed Cloud. I really like that the team has chosen to keep attendance small, because it created a close-knit working group that was full of collaboration and the exchange of knowledge and ideas. It was a relaxed, fun and friendly environment to work in.

Day One

After a quick coffee and light breakfast, the Datathon opened with introductory talks from project team members Ian Milligan (Principal Investigator), Nick Ruest (Co-Principal Investigator) and Samantha Fritz (Project Manager), relating to the project – its goals and outcomes, the toolkit, available datasets and event logistics.

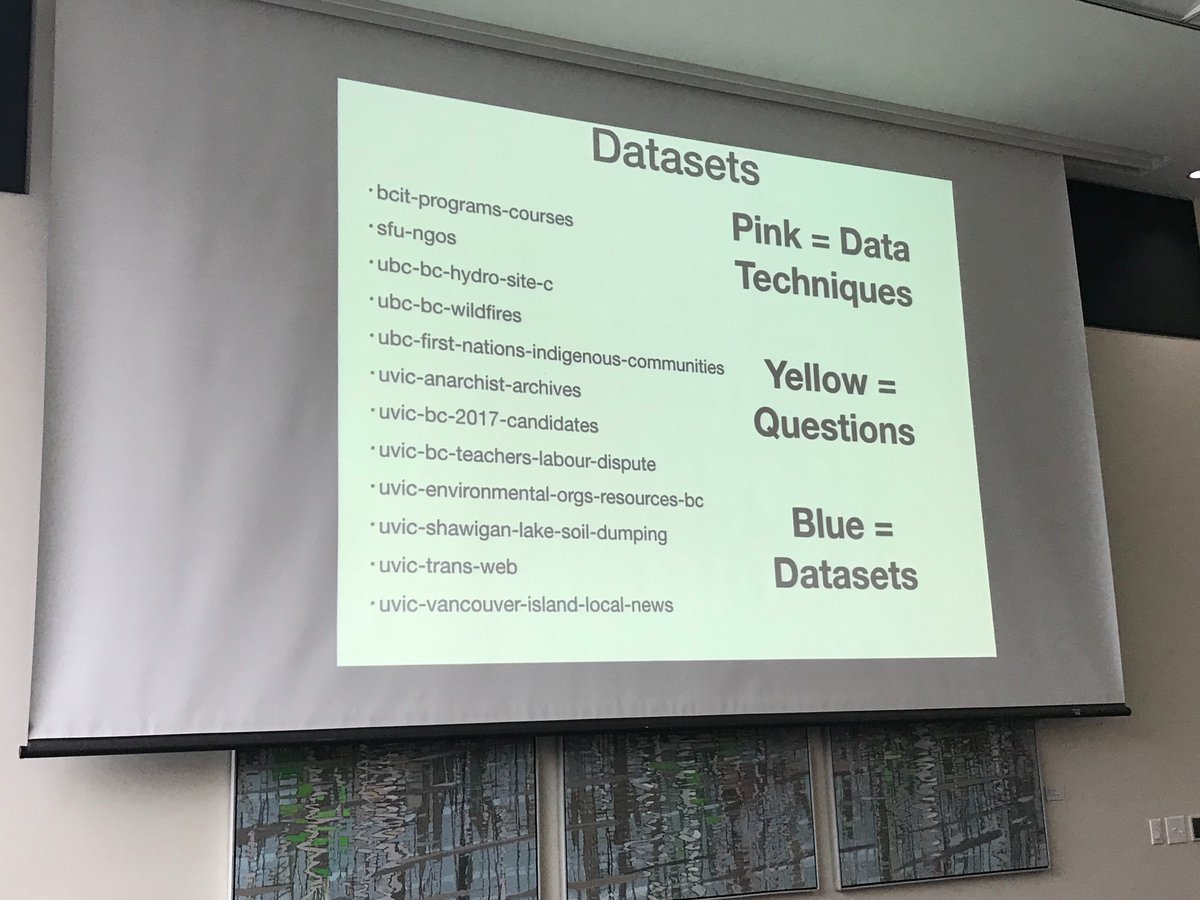

Another quick coffee break and it was back to work – participants were asked to think about the datasets that interested them, techniques they might want to use and questions or themes they would like to explore and write these on sticky notes.

Once the notes were placed on the whiteboard, teams naturally formed around datasets, themes and questions. The team I was in consisted of Kathleen Reed and Ben O’Brien and formed around a common interest in exploring the First Nations and Indigenous communities dataset.

Group formation is always a fun part about #HackArchives! Look at all the brainstorming going on. Excited to see how teams will develop. pic.twitter.com/XXWP5TnziL

— The Archives Unleashed Project (@unleasharchives) November 1, 2018

Virtual machines (VMs) were kindly provided by Compute Canada and were available throughout the Datathon to run AUT. Datasets were preloaded onto these VMs and a number of derivative files had already been created. We spent some time brainstorming, sharing ideas and exploring datasets using a number of different tools. The day finished with some informative lightning talks about the work participants had been doing with web archives at their home institutions.

Day Two

On day two we continued to explore datasets by using the full-text derivatives, running some NER and performing keyword searches with the command-line tool grep. We also analysed the text using sentiment analysis with the Natural Language Toolkit. To help visualise the data, we took the new text files produced from the keyword searches and uploaded them into Voyant Tools. This helped by visualising links between words, creating a list of top terms and providing quantitative data such as how many times each word appears. It was here we found that the word ‘letter’ appeared quite frequently and we finalised the dataset we would be using – University of British Columbia – bc-hydro-site-c.
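The keyword search and top-terms steps can be approximated in a few lines of plain Python. This is only a sketch of the idea, not what we actually ran (we used grep and Voyant Tools); the function names and sample lines are my own assumptions.

```python
import re
from collections import Counter


def grep_lines(lines, keyword):
    """Return the lines that contain keyword, ignoring case (like `grep -i`)."""
    pattern = re.compile(re.escape(keyword), re.IGNORECASE)
    return [line for line in lines if pattern.search(line)]


def top_terms(lines, n=10, min_length=4):
    """Count the most frequent words with at least min_length letters."""
    words = re.findall(r"[a-z]+", " ".join(lines).lower())
    return Counter(w for w in words if len(w) >= min_length).most_common(n)


if __name__ == "__main__":
    # Hypothetical lines standing in for a full-text derivative file.
    text = [
        "A letter about the dam",
        "Nothing relevant here",
        "Another LETTER, another letter",
    ]
    matches = grep_lines(text, "letter")
    print(matches)
    print(top_terms(matches, n=3))
```

The `min_length` cut-off is a crude stand-in for a stop-word list; tools like Voyant handle that far more carefully.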

We hunted down the site and found it contained a number of letters from people about the BC Hydro Dam Project. The problem was that the letters were in a table and, when extracted, the data was not clean enough. Ben O’Brien came up with a clever extraction solution utilising the raw HTML files and some script magic. Kathleen Reed then prepped the data for geocoding to show the geographical spread of the letter writers, hotspots and a timeline, which is a useful way of looking at the issue from the perspective of engagement and the community.
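I don't have Ben's script to hand, so the following is not his solution, just a minimal sketch of the general idea of pulling table rows out of raw HTML using Python's standard `html.parser` module. The class name, helper function and sample table are all made up for illustration.

```python
from html.parser import HTMLParser


class TableRows(HTMLParser):
    """Collect the text of each <td>/<th> cell, grouped by <tr> row."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row being read, None outside a row
        self._cell = None  # text fragments of the cell being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            # Collapse runs of whitespace left over from the markup.
            self._row.append(" ".join("".join(self._cell).split()))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None
            self._cell = None  # drop any cell left unclosed in this row


def extract_rows(html):
    """Return a list of rows, each a list of cleaned cell strings."""
    parser = TableRows()
    parser.feed(html)
    parser.close()
    return parser.rows


if __name__ == "__main__":
    # Invented sample markup, not taken from the archived site.
    sample = """
    <table>
      <tr><th>Name</th><th>Location</th></tr>
      <tr><td>Sample  Writer</td><td>Vancouver,
          BC</td></tr>
    </table>
    """
    for row in extract_rows(sample):
        print(row)
```

Rows in this shape (one list of cells per letter) are easy to write out as CSV, which is the kind of input a geocoding step would typically expect.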

Time Lapse of locations of letter writers.

At the end of day two each team had a chance to present their project to the other teams. You can view the presentation we prepared (Exploring Letters of protest for the BC Hydro Dam Site C) here, as well as the other team projects.

Why Web Archives Matter

How we preserve, collect, share and exchange cultural information has changed dramatically. The act of remembering at national institutions and libraries has altered greatly in terms of scope, speed and scale due to the web. The way in which we provide access to, use and engage with archival material has been disrupted. All current and future historians who want to study the periods after the 1990s will have to use web archives as a resource. Currently, accessibility and usability have lagged behind, and many students and historians are not ready. Projects like Archives Unleashed will help to equip researchers, historians, students and the community with the necessary tools to combat these problems. I look forward to seeing the next steps the project takes.

Archives Unleashed are currently accepting submissions for the next Datathon in March 2019. I highly recommend it.