Having been awarded the Diversity Bursary for BME individuals, sponsored by Kevin J Bolton Ltd., I was able to attend the ARA Annual Conference 2018 held in Glasgow in August.

Capitalising on the host city’s existing ubiquitous branding of People Make Glasgow, the Conference Committee set People Make Records as this year’s conference theme. This was then divided into three individual themes, one for each day of the conference:

- People in Records

- People Using Records

- People Looking After Records

Examined through the lens of the above themes over the course of three days, this year’s conference addressed three key areas within the sector: representation, diversity and engagement.

Following an introduction from Kevin Bolton (@kevjbolton), the conference kicked off with Professor Gus John (@Gus_John) delivering the opening keynote address, entitled “Choices of the Living and the Dead”. With People Make Records the theme for the day, Professor John gave a powerful talk discussing how people are impacting the records and recordkeeping of African (and other) diaspora in the UK, enabling the airbrushing of the history of oppressed communities. Professor John noted that yes, people make records, but we also determine what to record, and what to do with it once it has been recorded.

Noting the ignorance surrounding racial prejudice and violence, and citing the Notting Hill race riots, the Windrush generation, and Stephen Lawrence as examples, Professor John illustrated how the commemoration of historical events is selective: while in 2018 the 50th anniversary of the Race Relations Act received much attention, the 500th anniversary of the start of the Transatlantic Slave Trade was, in comparison, largely ignored by the sector and the media alike. This culture of oppression and omission, he said, is leading to ignorance amongst young people about major defining events, contributing to a removal of context for historically oppressed groups.

In response to questions from the audience, Professor John noted that one of the problems facing the sector is the failure to interrogate the ‘business as usual’ climate, and that it may be ‘too difficult to consider what an alternative route might be’. Professor John challenged us to question the status quo: ‘Why is my curriculum white? Why isn’t my lecturer black? What does “de-colonising” the curriculum mean? This is what we must ask ourselves’.

Following Professor John’s keynote and his ultimate call to action, there was a palpable atmosphere of engagement amongst the delegates, with me and those around me eager to spend the next three days learning from the experiences of others, listening to new perspectives and extracting guidance on the actions we may take to develop and improve our sector, in terms of representation, diversity and engagement.

Various issues relating to these areas were threaded throughout many of the presentations, and as a person of colour at the start of my career in this sector, and recipient of the Diversity Bursary, I was excited to hear more about the challenges facing marginalised communities in archives and records, including some I could relate to on a personal and professional level, and, hopefully, also take away some proposed solutions and recommendations.

I attended an excellent talk by Adele Patrick (@AdelePAtrickGWL), of Glasgow Women’s Library, who discussed the place for feminism within the archive, noting GWL’s history in resistance, and insistence on a plural representation, when women’s work, past and present, is eclipsed. Dr Alan Butler (@AButlerArchive), Coordinator at Plymouth LGBT Community Archive, discussed his experiences of trying to create a sense of community within a group that is inherently quite nebulous. Nevertheless, Butler illustrated the importance of capturing LGBTQIA+ history, as people today are increasingly removed from the struggles that previous generations have had to overcome, echoing a similar point Professor Gus John made earlier.

A presentation which particularly resonated with me came from Kirsty Fife (@DIYarchivist) and Hannah Henthorn (@hanarchovist), on the issue of diversity in the workforce. Fife and Henthorn presented the findings from their research, including their survey of experiences of marginalisation in the UK archive sector, highlighting the structural barriers to diversifying the archive sector workforce. Fife and Henthorn identified several key themes which are experienced by marginalised communities in the sector, including: the feeling of isolation and otherness in both workplaces and universities; difficulties in gaining qualifications, perhaps due to ill health, disability, financial barriers or other commitments; feeling unsafe and underconfident in professional spaces; and a frustration at the lack of diversity in leadership roles.

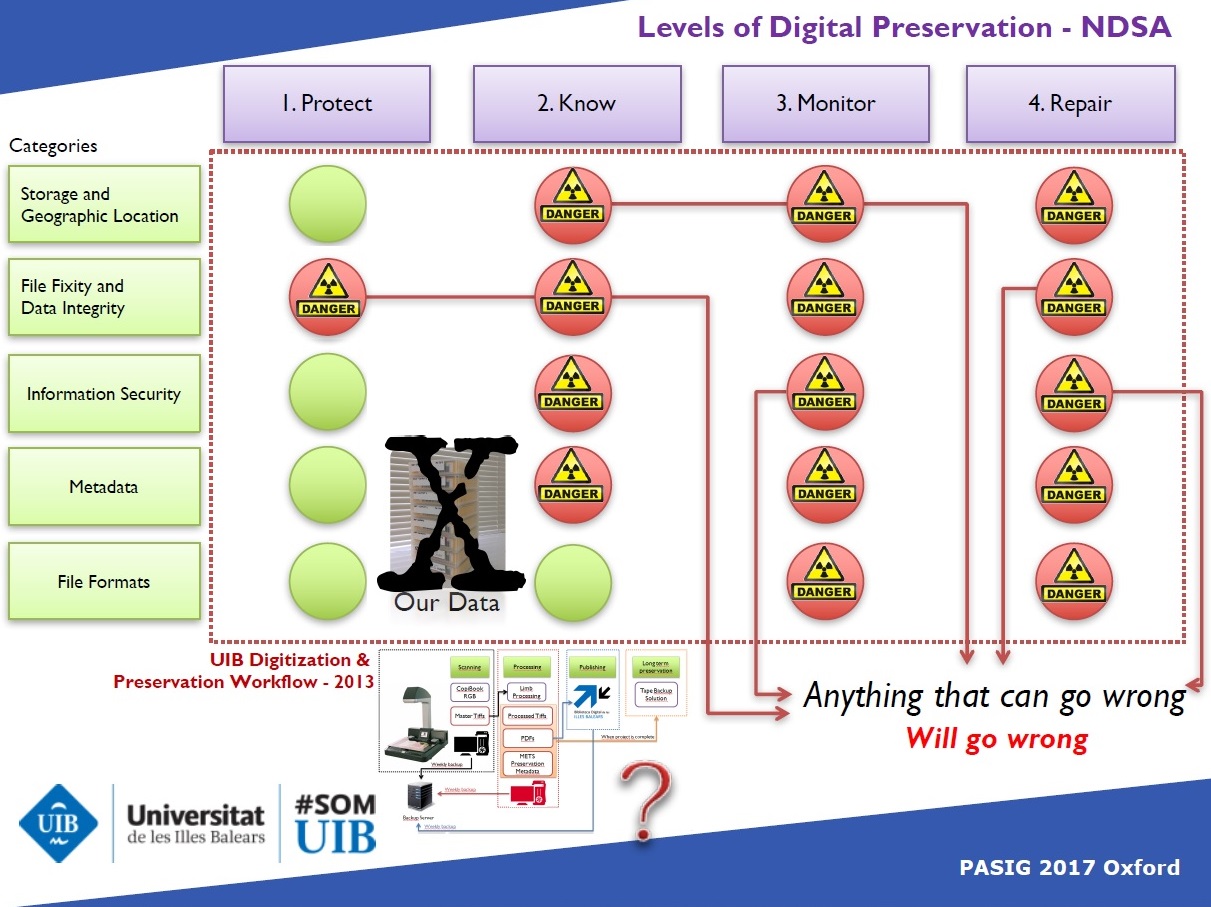

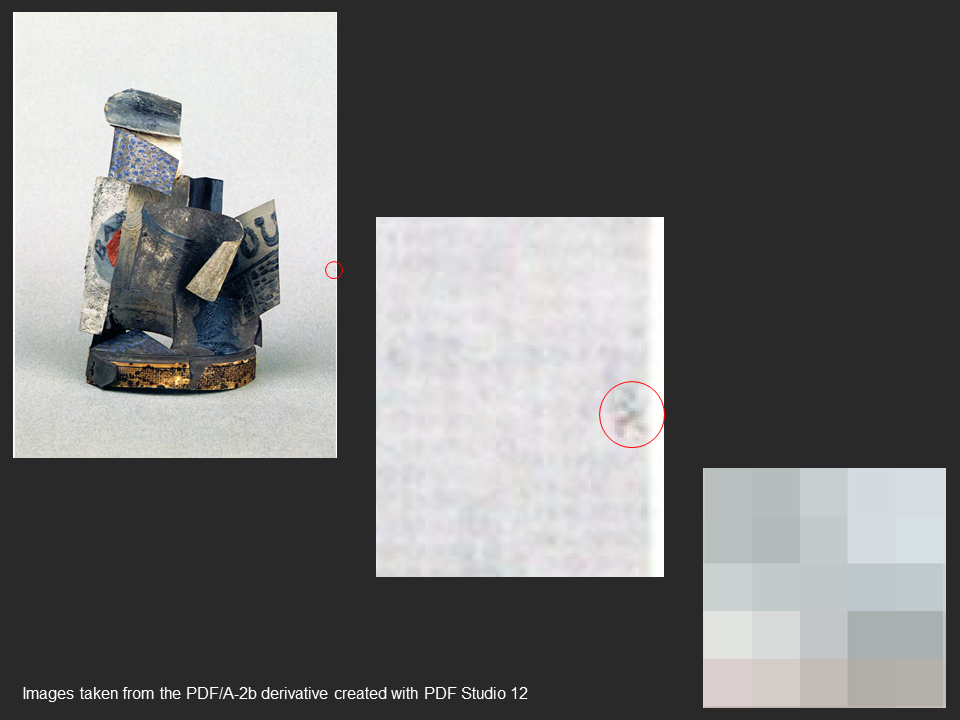

As a Graduate Trainee Digital Archivist, I couldn’t abandon my own focus on digital preservation and digital archiving, and as such attended various digital-related talks. These included “Machines make records: the future of archival processing” by Jenny Bunn (@JnyBn1), discussing the impact of taking a computational approach to archival processing, and “Using digital preservation and access to build a sustainable future for your archive”, led by Ann Keen of Preservica, with presentations given by various Preservica users. I also attended a mini-workshop on ethics in digital preservation, led by Sarah Higgins and William Kilbride, which asked us to consider whether we need our own code of conduct in digital preservation, and what this could look like.

William Kilbride and Sarah Higgins running their workshop “Encoding ethics: professional practice digital preservation”, ARA Annual Conference 2018, Glasgow

I have only been able to touch on a very small amount of what I heard and learnt at the many and varied talks, presentations and workshops at the ARA conference. However, one thing I took away from the conference was the realisation that archivists and recordkeepers have the power to challenge structural inequalities, and must act now in order to become truly inclusive. As Michelle Caswell (@professorcaz), the second keynote speaker, said, we must act with sensitivity, acknowledge our privileges and, above all, empower, not marginalise. This conference felt like a call to action to the archive and recordkeeping community to include the ‘hard to reach’ communities or alternatively, as Adele Patrick noted, the ‘easy to ignore’. As William Kilbride (@WilliamKilbride) said, this is an exciting time to be in archives.

I want to thank Kevin Bolton for sponsoring the Diversity Bursary, which enabled me to attend an enriching, engaging and informative event that would otherwise have been inaccessible to me.

________________________________________

Because every day is a school day, as homework for us all, I made a note of some of the recommendations made by speakers throughout the conference, compiled into this very brief list which I thought I would share:

Reading list

- Keys, David. (2018) Details of horrific first voyages in transatlantic slave trade revealed. 17 August 2018. The Independent.

- Cobain, Ian. (2016) The History Thieves: Secrets, Lies and the Shaping of a Modern Nation. ISBN: 9781846275852

- Eichhorn, Kate. (2013) The Archival Turn in Feminism: Outrage in Order. ISBN: 9781439909515

- McIntosh, Peggy. (1988) White Privilege: Unpacking the Invisible Knapsack. Essay excerpt from “White Privilege and Male Privilege: A Personal Account of Coming To See Correspondences through Work in Women’s Studies”, Working Paper 189.

- Greene, M. A. & Meissner, D. (2005) More Product, Less Process: Revamping Traditional Archival Processing. The American Archivist: Fall/Winter 2005, Vol. 68, No. 2, pp. 208-263.

- Fife, K. & Henthorn, H. (2017) Decentring Qualification: A Radical Examination of Archival Employment Possibilities. Radical Voices Conference, 3 March 2017.

- Brilmyer, Gracen. (2016) Identifying & Dismantling White Supremacy in Archives. Poster produced in Michelle Caswell’s Archives, Records and Memory class, Fall 2016, UCLA.