Today I presented an internal seminar on RDF to the Bodleian Library developers, the first in a series of (hopefully) regular R&D meetings. This one was meant as a practical introduction to RDF, giving us a common baseline for when we start building models of our content (at least one Library project requires us to generate RDF). I called it “What Have the Romans Ever Done for Us?”.

The title is a line from the Monty Python film “The Life of Brian”. I chose it not just because I’ve been looking into Python (the language), but also because I can imagine a future where people ask, in a disgruntled way, “What has RDF ever done for us?” In the film, people suggest the Romans did quite a lot: bits of public infrastructure, like aqueducts and roads, alongside useful services like wine and medicine. I think RDF is a bit like that. Done well, it creates a solid infrastructure on which useful services will be built, but it is also likely to be invisible, if not taken for granted, like sanitation and HTML. 🙂

The seminar itself seemed to go well, though you’d have to ask the attendees rather than the presenter to get the real story! We started with some slides that outlined the basics of RDF, using Dean Allemang and Jim Hendler’s nice method of distributing responsibility for tabular data until you end up at RDF (see p. 32 onwards in their book Semantic Web for the Working Ontologist), and then leapt straight in with LIBRIS (for example, Neverwhere as RDF) as a case study. In the resulting discussion we looked at notation, RDFS, and linked data.
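To make the table-to-RDF idea a little more concrete, here is a minimal Python sketch. It is not Allemang and Hendler’s own code, just an illustration under stated assumptions: each row becomes a subject, each column a predicate, and each cell a single triple, so one table breaks apart into independent statements that different parties could each hold. The base URI `http://example.org/`, the `book` table and its columns are hypothetical, and values are written as plain N-Triples literals with no datatypes.

```python
# A minimal sketch of "distributing" a table into RDF-style triples:
# row -> subject, column -> predicate, cell -> object (one triple per cell).
# BASE, the table name and the columns used below are hypothetical.

BASE = "http://example.org/"  # hypothetical namespace, not a real Bodleian URI


def literal(value):
    """Quote a value as an N-Triples literal, escaping backslashes, quotes and newlines."""
    text = str(value).replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{text}"'


def table_to_triples(table, key, rows):
    """Turn a list of row dicts into (subject, predicate, object) tuples."""
    triples = []
    for row in rows:
        # The key column identifies the row, so it becomes the subject URI.
        subject = f"<{BASE}{table}/{row[key]}>"
        for column, value in row.items():
            if column == key:
                continue  # already used as the subject, so no triple for it
            # Each remaining column name becomes a predicate URI.
            predicate = f"<{BASE}{table}#{column}>"
            triples.append((subject, predicate, literal(value)))
    return triples


def to_ntriples(triples):
    """Serialise the triples one per line, each terminated by ' .'."""
    return "\n".join(f"{s} {p} {o} ." for s, p, o in triples)


if __name__ == "__main__":
    # Hypothetical one-row "book" table, for illustration only.
    books = [{"id": "b1", "title": "Neverwhere", "author": "Neil Gaiman"}]
    print(to_ntriples(table_to_triples("book", "id", books)))
```

Running it prints two triples, one for the title and one for the author, both sharing the same subject URI. Because every cell is now a self-contained statement, the author triple could just as well live on someone else’s server, which is the point of the “distributing responsibility” exercise.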

The second half of the seminar was a workshop in which we split into two groups, data providers and data consumers. The providers considered what resources at the Bodleian might be suitable for publication as RDF (and linked data), while the consumers considered what services we might build using data from elsewhere.

The data providers discussed how there were probably a lot of resources in the Library that we could publish, or become the authority on: members of the University, for example, or any of the many wonders in our collections. To make this manageable, the group felt it would be sensible to break the task up, probably by project. This would also allow specific models to be identified and/or developed for each set of resources.

There was some concern among the providers about how to “sell” the benefits of RDF and linked data to management. The concerns paralleled what I imagine happened with the emergent Web: is this kind of data publication giving away valuable information assets for little or no return? At worst, it could lead people away from the Library to view and use our information via aggregation services. Of course, there is a flip side to this argument. Serendipitous discovery of a Bodleian resource via a third party is essentially free advertising, and may drive users through our (virtual or real) door.

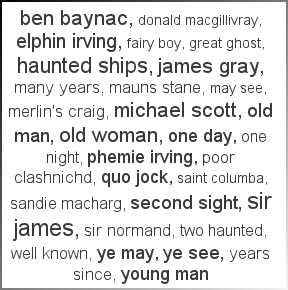

The consumers seemed to realise early on that one of the big problems was the lack of usable data. Indeed, while preparing the talk, I scoured datasets trying to find a decent match of data to augment Library catalogues and found it quite hard. That isn’t to say there isn’t a lot of data available (though of course there could be more); it is just that, without a real application for it, shoehorning data into a novel use produces little more than a novelty item. However, one possible example was the Legislation API. The group suggested that reviews from other sites could be used to augment Library catalogue results. They also suggested that any people data the Library published could be very useful, and Monica talked about a suggestion she had heard at Dev8D for a developer expertise database (data Web?).

All in all, there was a lot of very useful discussion, and I hope everyone went away with a good idea of what RDF is, what it might do for us and what we might do with it. There (justifiably) remains some scepticism, mostly because without the Web, linked data is simply data, and we’ve all got our own neat ways to handle data already, be it a SQL database, XML & Solr, or whatever. Without the Web of Data, the question “What do we gain?” remains.

It is a bit chicken-and-egg, and the answer will eventually become clear as more and more people create machine-processable data on the Web.

For the next meeting we’ll be modelling people. I’m going to bring the clay! 🙂

Slides and worksheets are available, along with the source OpenOffice documents, on this (non-RDF) page!

-Peter Cliff