As some of you may know, since 2011 the Bodleian has been archiving websites, which are collected in the Bodleian Libraries Web Archive (BLWA) and made publicly accessible through the platform Archive-It. BLWA is thematically organised into seven collections: Arts and Humanities; Social Sciences; Science, Technology and Medicine; International; Oxford University Colleges; Oxford Student Societies; and Oxford GLAM. As their names suggest, much of the online content we collect relates to Oxford University and seeks to provide a snapshot of its intellectual, cultural and academic life as well as to document the University’s main administrative functions.

From the very beginning, the BLWA collection has also been regarded as a complement to and reflection of the Bodleian’s analogue special collections that users can consult in the reading rooms. For example, there are multiple meaningful links between our BLWA Arts & Humanities collection and the Bodleian’s Modern Archives & Manuscripts. By teasing out the connections between them, I hope to offer some concrete examples of how archived websites can be valuable to historical and cultural research and explore some of the reasons why the BLWA can be seen as integral to the Bodleian Special Collections.

Collecting author appreciation society websites…

In BLWA, you can find websites of societies dedicated to the study of famous authors whose papers are kept at the Bodleian (partly or in full), such as T.S. Eliot, J.R.R. Tolkien and Evelyn Waugh. An example from this category is The Philip Larkin Society website, which complements the holdings of correspondence to and from the poet and librarian Philip Larkin (1922-1985) held at the Bodleian.

The website provides helpful information to anyone with a general or academic interest in Larkin, as it lists talks and events about the poet as well as relevant publications and online resources promoted by the Society.

A 2018 capture in BLWA of a webpage from the Larkin Society website, describing a public art project celebrating Larkin’s famous poem ‘Toads’

The value of the archived version of The Philip Larkin Society website may not be immediately apparent now, when the live site is still active. However, decades from now, this website may well become a primary source that offers a window onto how early 21st-century society engaged with English poetry and disseminated research about the topic through media and formats distinctive of our time, such as online reviews, podcasts and blog posts.

…and social media accounts

Alongside websites, BLWA has been actively collecting Twitter accounts pertaining to authors and artists, such as The Barbara Pym Society Twitter presence.

The Twitter feed preserves the memory of ephemeral but meaningful encounters and forms of engagement with the works of English novelist Barbara Pym (1913-1980). The experience of consulting the Archive of English Novelist Barbara Pym in the Weston reading rooms is enriched by the possibility of reading through the posts on the Pym Twitter account. From talks about Pym’s work to quotes in newspaper articles mentioning the author, the Twitter feed is not only a collection of news and information about Barbara Pym’s work, but also a representation of the lively network of individuals engaging with her writings, both in academic and broader circles.

Online presence of contemporary artists

Building an online presence through social media and a personal website is a promotional strategy that many contemporary artists and authors have adopted. A good example of this is the website of the British photographer and documentarist Daniel Meadows (b. 1952). In 2019, BLWA started taking regular captures of Meadows’ website, Photobus, following the acquisition of Meadows’ Archive a year earlier. This hybrid archive (which includes both analogue and born-digital items) has since been catalogued and its finding aid is available here.

The captures taken of Meadows’ Photobus site provide us with contextual information on the photographic series described in the finding aid of Meadows’ Archive at the Bodleian. Through the website, we get an account of Meadows’ life in his own words, we learn about the exhibitions where Meadows’ photographs were displayed and find out about the books in which his work has been published.

If you were to search for Daniel Meadows’ website on the live web right now, you would find that the website is still active, but looks rather different in content and layout from the captures archived in the BLWA between 2019 and March 2023.

Comparison of the ‘About’ page on Daniel Meadows’ website: the BLWA capture from January 2023 (top), and the capture from May 2023 (bottom)

Furthermore, the URL has changed from Photobus to the name of the photographer himself. Were it not for the version of the website archived in BLWA, the old content and structure of the site would not be as easily accessible. The website has also changed in scope, as it now provides us with a comprehensive digital repository of Meadows’ photographic series.

Comparing Meadows’ website in BLWA with his archive at the Bodleian, we can see an interesting series of correspondences between the digital and analogue realms, and between digital and physical archives. For example, the archived version of Meadows’ website Photobus is included as a link in the section of the finding aid for the Meadows archive devoted to ‘related materials’. In turn, the updated 2023 version of Meadows’ site reflects in some respects the organisation and structure of an archive: his oeuvre is tidily arranged into series, each accompanied by a description and digital images of the photographs to match their arrangement in the physical archive at the Bodleian. Daniel Meadows’ new website exemplifies how, through the combination of metadata and high-resolution images, websites can become a powerful interface through which an archive is discovered and its contents accessed in ways that complement and enhance the experience of working through an archival box in a reading room.

Archived websites as a link to tomorrow’s archives

Web archives are a relatively recent phenomenon, so the uses of a collection of archived websites like the BLWA are only gradually beginning to emerge. The historical, cultural and evidential value of web archives is still overlooked, or perhaps just not yet fully exploited. It is only a matter of time before social media and websites like those kept in BLWA are seen as an increasingly important resource on the cultural significance of 20th- and 21st-century authors and artists and the reception of their work. After all, for today’s authors and artists, social media and websites are an important vehicle for disseminating news about their work, their opinions and their creativity. As such, their online presence may be different in form, but it is similar in purpose and significance to the letters, pamphlets, alba amicorum and diaries that one would consult to research the social interactions, ideas, and activities of a humanist scholar.

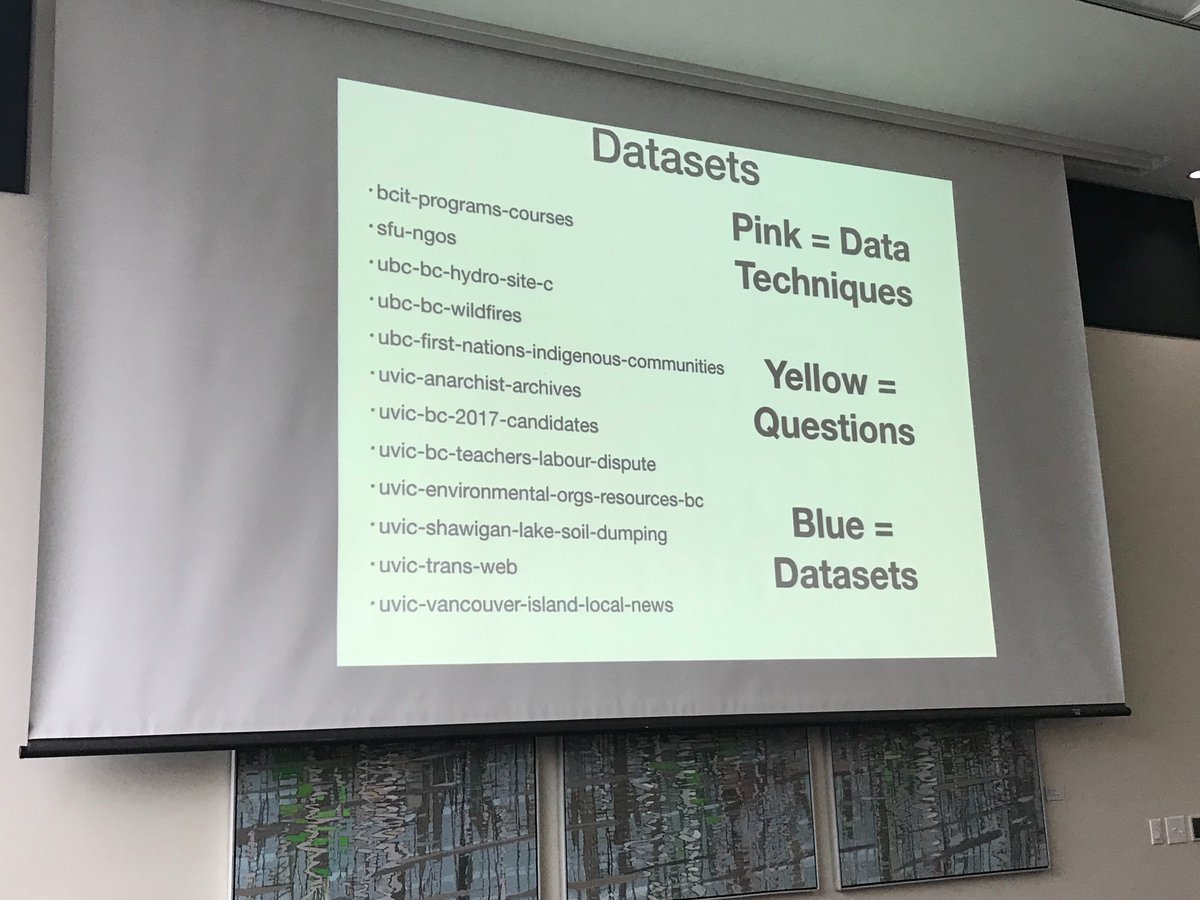

One of the exciting aspects of working with digital archives is the proactive nature of our collecting practice. Curators of digital collections need to identify, select and collect relevant content before it disappears or decays – threats to which websites and social media are vulnerable. Through the choices we make today about which content to archive, we are ultimately shaping the digital archives that will be accessible decades from now.

We are happy to consider suggestions from our users about websites that could be suitable additions to the collection. If you are curious to explore the BLWA collection further, you can find it here. The online nomination form can be found at this link. So don’t just follow the links – help us save them!